Connected autonomous vehicles make intersections safer

BY ERIK WIRTANEN

Originally published July 14, 2021.

Autonomous vehicles are becoming increasingly common across the United States, and with them come safety concerns and mixed public perceptions of self-driving automobiles. One of the major worries centers on the places where the majority of traffic accidents occur: roadway intersections.

According to the Federal Highway Administration, more than 50% of the combined total of automobile crashes that result in fatalities and injuries occur at or near intersections. A study by the Insurance Institute for Highway Safety determined that self-driving cars could eliminate around 40% of crashes, but the goal is to devise an even safer solution.

A project by researchers in the Ira A. Fulton Schools of Engineering at Arizona State University and Carnegie Mellon University aims to improve intersection safety among connected autonomous vehicles, or CAVs, and distributed real-time systems. A distributed real-time system is a collection of autonomous computers, connected through a communication network, that exchange messages in real time. A common example of this type of system is the one that enables online seat selection for a movie theater.

“Normally autonomous vehicles are ‘isolated’ in the sense that they do not exchange information with other autonomous vehicles,” says Aviral Shrivastava, an associate professor of computer science and engineering in the School of Computing and Augmented Intelligence, one of the seven schools in the Fulton Schools. “They just sense their surroundings all by themselves and make their driving decisions independently. All existing autonomous vehicles are isolated AVs.”

Shrivastava explains that CAVs can exchange information with each other, allowing them to know much more about their surroundings and to travel with greater confidence. As a result of communication between vehicles, CAVs can potentially drive faster and be safer than current AVs.

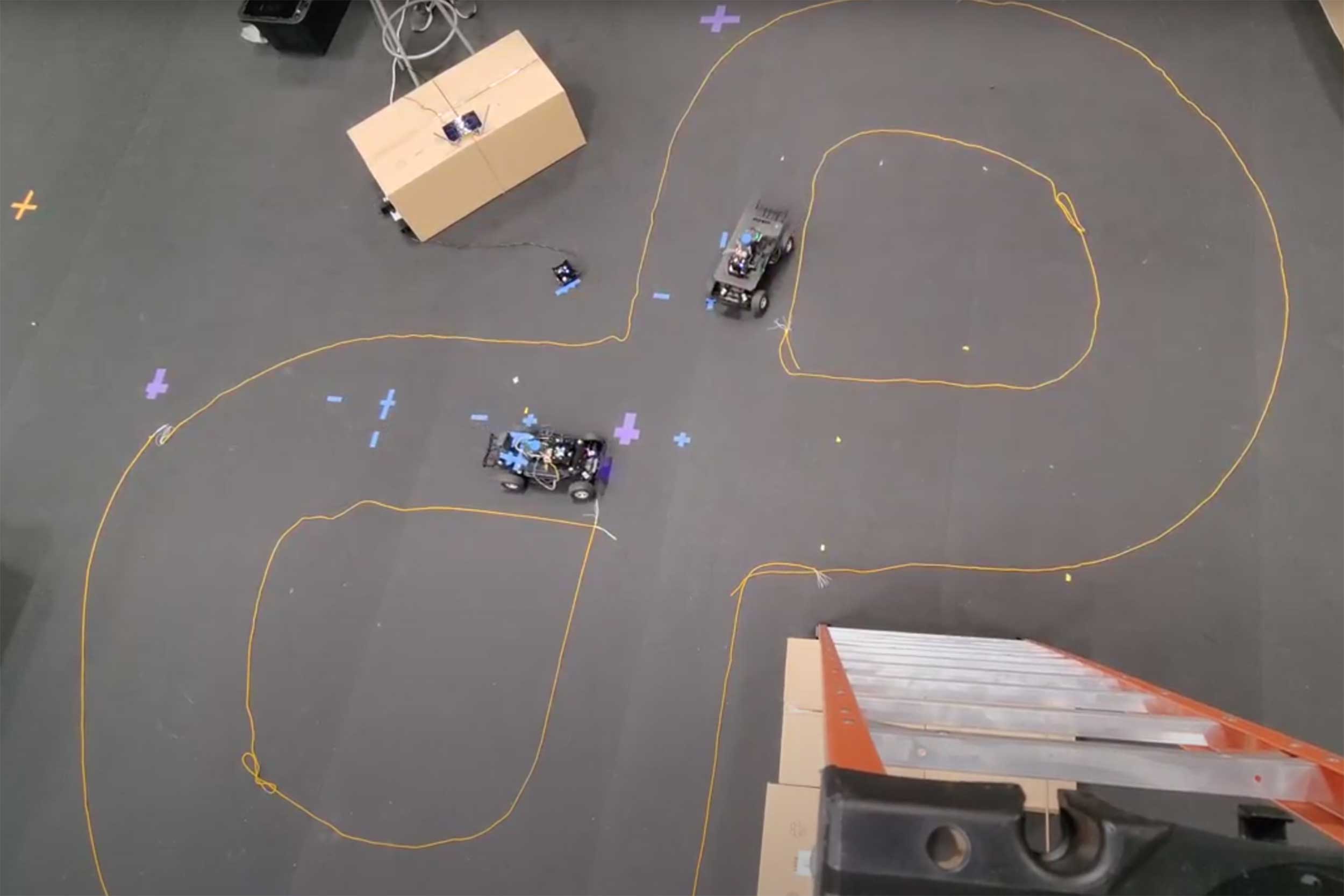

In this lab demo, a camera pointed at the intersection functions as a sensor and verifies the position of the vehicle requesting entry into the intersection as well as the positions of what the vehicle is seeing. Video courtesy of Edward Andert.

Students in Shrivastava’s lab have been working to coordinate distributed real-time systems for CAVs. Their project was selected for entry into the student design competition at the 2021 CPS-IoT Week virtual conference. The event brings together researchers from the cyber-physical systems and Internet of Things fields for a series of five conferences.

The team of students developed an algorithm, built on a time-sensitive programming paradigm, to manage autonomous vehicles passing through an intersection. The system enables programmers to deploy their entire program at a macro level as one application. The team’s compilation methodology then automatically breaks the application down into the parts that need to be executed on the different components of the computing system.

“We took this project and ported it to run on a programming language called TTPython, or TickTalk Python,” says Edward Andert, a computer engineering doctoral student in the Fulton Schools and one of the official team members in the competition.

TTPython is a new Python-like programming language being developed by Shrivastava and his CMU colleagues as a part of a prestigious National Science Foundation Cyber-Physical Systems award. TTPython is a “macro” programming language that allows programmers to express the functionality of the entire distributed system and specify the timing requirements among the different events in the application.

The student team won second place at the competition with their presentation “One Program to Rule the Intersection.” In addition to Andert, the team featured doctoral students Reese Grimsley and Eve Hu from Carnegie Mellon along with Ian McCormack, an undergraduate student from the University of Wisconsin-Eau Claire who interned at Carnegie Mellon. Bob Iannucci, an electrical and computer engineering professor at Carnegie Mellon, was the faculty advisor of the project for the competition.

The competition’s challenge was to make an interesting edge computing application using the Nvidia Jetson platform. Edge computing moves some storage and computing resources out of a central data center and closer to the source of the data. This allows processing and analysis to be done where data is generated instead of transmitting raw data to a central location.

The project involves the use of two 1:10 scale connected autonomous vehicles, each equipped with an onboard LIDAR camera. The vehicles drive in a figure-eight pattern, repeatedly arriving at an intersection where they request entry by sending signals about their position and their sensor readings, and then are given a trajectory to follow through the intersection.

“This programming language is a data flow language that deals with the timing, scheduling and distribution of code to the devices in a real-time distributed system,” Andert says. “The TickTalk ported version worked better than our original application and we saw a significant reduction in timing problems and smoother operation as a result of porting the application over.”

The algorithm the team created improves the safety of autonomous vehicles in several ways. Primarily, it requires vehicles to send their sensor information along with a request to enter the intersection, a step that has not really been part of intersection management before.

A camera pointed at the intersection functions as a sensor. The camera verifies the position of the vehicle requesting to enter the intersection as well as the positions of what the vehicle is seeing. If more than one CAV is requesting entry into the intersection, data from each vehicle is collected, and the routes of the CAVs are mapped and monitored to ensure they are followed precisely.
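The position check described above can be illustrated with a short Python sketch. This is not the team's code: the `Position` type, the `position_verified` function and the 0.25-meter tolerance are hypothetical names and values chosen only for illustration. The sketch compares the position a vehicle reports with the position the roadside camera observes and accepts the report only if the two agree within a tolerance.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Position:
    """A 2D point in a shared intersection frame (hypothetical type)."""
    x: float  # meters
    y: float  # meters


def position_verified(reported: Position, observed: Position,
                      tolerance_m: float = 0.25) -> bool:
    """Return True if the reported and camera-observed positions agree.

    tolerance_m is an assumed threshold, not a value from the project.
    """
    # Straight-line distance between the two position estimates.
    error_m = math.hypot(reported.x - observed.x, reported.y - observed.y)
    return error_m <= tolerance_m
```

In a setup like the one described, a report that fails this kind of check would presumably not be trusted when routes are assigned, though the article does not say how the team handles a mismatch.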

“An example of this is if the vehicles do not receive a response from the roadside intersection computer that provides a route within a certain time period,” says Andert, “the vehicle will change its route plan and stop before it reaches the intersection.”
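The fail-safe Andert describes amounts to a deadline check on the vehicle side. The following Python sketch shows one way such a rule could look; the function name `plan_after_request`, the 0.5-second deadline and the returned labels are assumptions made for illustration, not details of the team's TTPython program.

```python
from typing import Optional, Sequence, Tuple

# A route is modeled here as a list of (x, y) waypoints (assumed representation).
Waypoint = Tuple[float, float]


def plan_after_request(route: Optional[Sequence[Waypoint]],
                       elapsed_s: float,
                       deadline_s: float = 0.5) -> str:
    """Decide what the vehicle does after requesting entry.

    route      -- the route from the roadside computer, or None if no
                  response has arrived
    elapsed_s  -- seconds since the request was sent
    deadline_s -- assumed response deadline (hypothetical value)
    """
    # Follow the assigned route only if it arrived within the deadline.
    if route is not None and elapsed_s <= deadline_s:
        return "follow_route"
    # Otherwise fall back to the safe behavior: stop short of the intersection.
    return "stop_before_intersection"
```

Treating a late response the same as a missing one is a deliberate choice in this sketch: a route computed for an earlier moment may no longer be safe to follow.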

Shrivastava and his team are continuing the development of the system in his Make Programming Simple Lab. The collaboration includes work by Mohammad Khayatian, now an assistant professor at San Jose State University who graduated from ASU this summer with a doctoral degree in computer engineering.

It will be a while before CAVs are out on the roads, but research such as this project lays the groundwork for a future of increased safety and efficiency. The ability of CAVs to communicate with infrastructure and other vehicles has the potential to significantly decrease the number of collisions attributed to human error and can help transportation agencies better manage traffic flow and emergencies.
