Closing the gap for real-time data-intensive intelligence

An NSF CAREER Award funds a novel solution to speed up inferences in computer databases

Eleven faculty members in the Ira A. Fulton Schools of Engineering at Arizona State University have received NSF CAREER Awards in 2022.

The online world fills databases with immense amounts of data. Your local grocery stores, your financial institutions, your streaming services and even your medical providers all maintain vast arrays of information across multiple databases.

Managing all this data is a significant challenge. Applying artificial intelligence to such data to make inferences, whether by applying logical rules or interpreting information, is often urgent, and delays, known as latencies, are a major issue. Applications such as supply chain prediction, credit card fraud detection, customer service chatbot provision, emergency service response and health care consulting all require real-time inferences from data being managed in a database.

Because existing databases lack support for machine learning inference, a separate process and system is needed, a limitation that is particularly critical for applications like the ones mentioned above. The data transfer between the two systems significantly increases latency, and this delay makes it challenging to meet the time constraints of interactive applications that need real-time results.

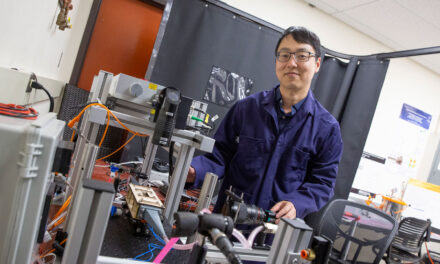

Jia Zou, an assistant professor of computer science and engineering in the Ira A. Fulton Schools of Engineering at Arizona State University, and her team of researchers are proposing a solution that, if successful, will greatly reduce the end-to-end latency for all-scale model serving on data that is managed by a relational database.

“When running inferences on tens of millions of records, the time spent in moving input-output data around could be 20 times more than the inference time,” Zou says. “Such increase in latency is unacceptable to many interactive analytics applications that require making decisions in real-time.”

Zou’s proposal, “Rethink and Redesign of Analytics Databases for Machine Learning Model Serving,” garnered her a 2022 National Science Foundation Faculty Early Career Development Program (CAREER) Award.

Zou’s project aims to bridge the gap by designing a new database that seamlessly supports and optimizes the deployment, storage, and serving of traditional machine learning models and deep neural network models.

“There exist two general approaches to transform deep neural networks and traditional machine learning models to relational queries so that model inferences and data queries are seamlessly unified in one system,” Zou says. “The relation-centric approach breaks down each linear algebra operator to relational algebraic expressions and run as a query graph consisting of many fine-grained relational operators. The other approach is to encapsulate the whole machine learning model into a coarse-grained user-defined function that runs as a single relational operator.”

The major difference between the two approaches is that the relation-centric approach scales to large models but incurs high processing overheads, while the user-defined function-centric approach is more efficient but cannot scale to large models.
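To make the contrast concrete, here is a minimal sketch of the two approaches, using Python's built-in `sqlite3` module as a stand-in for an analytics database. The toy linear model, the table names and the `score` function are all assumptions made for illustration; they are not taken from Zou's system, which targets far larger models.

```python
import sqlite3

# Hypothetical toy model: a linear scorer y = w . x with three weights.
WEIGHTS = [0.5, -1.0, 2.0]
# Hypothetical input records, keyed by record id.
RECORDS = {1: [1.0, 0.0, 2.0], 2: [0.0, 1.0, 1.0]}

conn = sqlite3.connect(":memory:")

# Relation-centric layout: features and weights are stored as (index, value) tuples.
conn.execute("CREATE TABLE features (rec_id INTEGER, col INTEGER, val REAL)")
conn.execute("CREATE TABLE weights (col INTEGER, w REAL)")
conn.executemany(
    "INSERT INTO features VALUES (?, ?, ?)",
    [(rid, j, v) for rid, vec in RECORDS.items() for j, v in enumerate(vec)],
)
conn.executemany("INSERT INTO weights VALUES (?, ?)", list(enumerate(WEIGHTS)))

# The dot product is expressed as fine-grained relational operators:
# a join on the column index followed by a grouped aggregation.
relational_scores = conn.execute(
    """
    SELECT f.rec_id, SUM(f.val * w.w)
    FROM features AS f JOIN weights AS w ON f.col = w.col
    GROUP BY f.rec_id
    ORDER BY f.rec_id
    """
).fetchall()

# UDF-centric layout: one wide row per record, and the whole model is
# wrapped in a single coarse-grained user-defined function.
def score(x0, x1, x2):
    return sum(w * x for w, x in zip(WEIGHTS, (x0, x1, x2)))

conn.create_function("score", 3, score)
conn.execute("CREATE TABLE records (rec_id INTEGER, x0 REAL, x1 REAL, x2 REAL)")
conn.executemany(
    "INSERT INTO records VALUES (?, ?, ?, ?)",
    [(rid, *vec) for rid, vec in RECORDS.items()],
)
udf_scores = conn.execute(
    "SELECT rec_id, score(x0, x1, x2) FROM records ORDER BY rec_id"
).fetchall()

print(relational_scores)
print(udf_scores)
```

Both queries return the same score for each record. In the relational version the weights live in a table the database can partition and join like any other data, which is what lets that style scale, while the function version keeps the weights in the function's own memory and avoids the per-tuple join and aggregation work.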

Zou’s proposed solution is to dynamically combine both approaches by adaptively encapsulating subcomputations that involve small-scale parameters to coarse-grained user-defined functions and mapping subcomputations that involve large-scale parameters to fine-grained relational algebraic expressions.
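The article does not describe how the system decides which subcomputation goes where. As a rough illustration only, the sketch below assumes a simple rule: compare each subcomputation's parameter count against a fixed budget. The `SubComputation` class, the `assign_strategies` function and the budget value are hypothetical names and numbers, not part of the proposed system.

```python
from dataclasses import dataclass


@dataclass
class SubComputation:
    """One piece of a model, such as a single layer (hypothetical structure)."""
    name: str
    num_parameters: int


def assign_strategies(subcomputations, udf_parameter_budget):
    """Map each subcomputation to "udf" or "relational".

    Assumed rule: parameters that fit within the budget are wrapped in a
    coarse-grained user-defined function; anything larger is lowered to
    fine-grained relational algebra so the database can scale it out.
    """
    strategies = {}
    for sub in subcomputations:
        if sub.num_parameters <= udf_parameter_budget:
            strategies[sub.name] = "udf"
        else:
            strategies[sub.name] = "relational"
    return strategies


# Hypothetical model: a very large embedding table followed by small dense layers.
model = [
    SubComputation("embedding", 50_000_000),
    SubComputation("dense_1", 10_000),
    SubComputation("dense_2", 1_000),
]
plan = assign_strategies(model, udf_parameter_budget=1_000_000)
print(plan)
```

With these made-up numbers, the embedding lookup would be mapped to relational operators and the two dense layers would be wrapped in user-defined functions. A real optimizer would likely weigh more than parameter count, such as available memory and data size.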

To accomplish that, Zou, who teaches in the School of Computing and Augmented Intelligence, one of the seven Fulton Schools, proposed a two-level Intermediate Representation, or IR, that supports the progressive lowering of all-scale machine learning models into scalable relational algebraic expressions and flexible yet analyzable user-defined functions.

“Based on such two-level IR, the proposed accuracy-aware query optimization and storage optimization techniques introduce model inference accuracy as a new dimension in the database optimization space,” Zou says. “This means the model inferences and data queries are further co-optimized in the same system.”

Zou says that it is critical and urgent to integrate data management and model serving in order to enable a broad class of applications that require data-intensive intelligence at interactive speed, such as credit card fraud prediction, personalized customer support, disaster response and real-time recommendations used on all types of apps.

“In the past 10 years, I have been focused on building data-intensive systems for machine learning, data analytics and transaction processing in both industry and academia,” Zou says.

Zou and her lab have also worked with potential industrial users such as the IBM T. J. Watson Research Center as well as multiple academic users.

In the future, she says, this work will deliver new techniques to advance logical optimization, physical optimization and storage optimization of end-to-end machine learning inference workflows in relational databases.

“We will also develop and open source a research prototype of the proposed system,” Zou says. “Moreover, the research results of the project will enhance and integrate educational activities at the intersection of big data management and machine learning systems.”

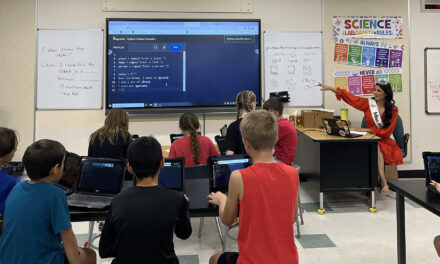

As part of the NSF CAREER Award, Zou’s work will also support a Big Data Magic Week activity for underrepresented K-12 students and refugee youths in Arizona. The activity will be used as a platform to prepare selected ASU undergraduate students for international research competitions, and be integrated with an ASU graduate-level course on data-intensive systems for machine learning.

“ASU provides a collaborative environment for machine learning-related research, through which we can easily identify the potential academic users of our research results,” Zou says. “The Fulton School of Engineering and SCAI provide great support to junior professors’ career development.”